4. Testing Mode

Testing Mode in OpenSesame allows you to evaluate your AI agent’s responses and actions in real time. This feature lets you interact with your agent by sending prompts one at a time, helping you refine its performance and improve accuracy based on immediate feedback.

Key Features

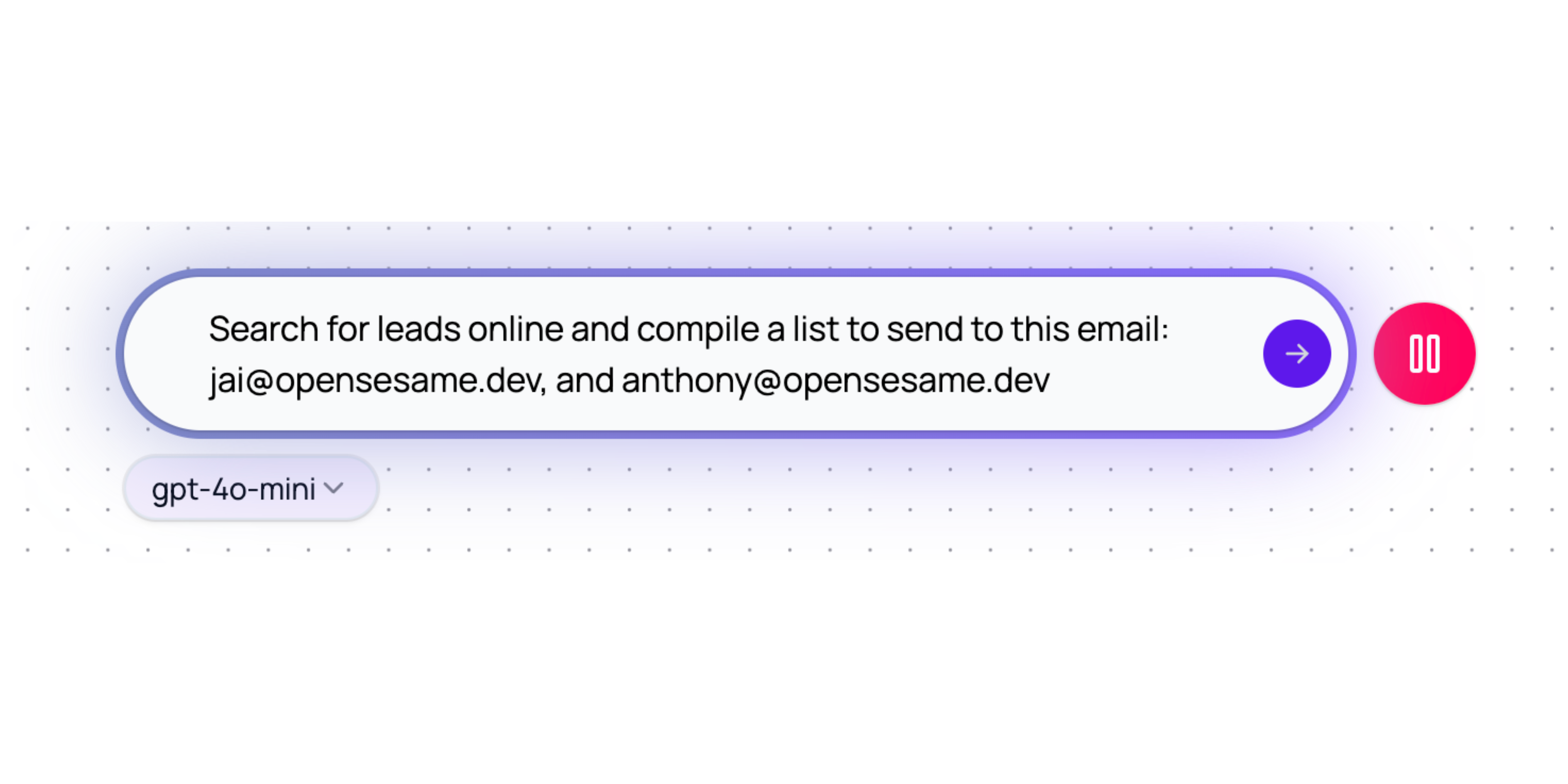

- Real-Time Testing: Activate Testing Mode by clicking the test button on the upper right side of the entry box. This mode lets you enter prompts or actions for your agent to process one at a time, so you can see each response in real time.

- Step-by-Step Evaluation: Testing Mode is designed to test actions individually, giving you a clear view of how your agent processes each prompt. This lets you make changes on the spot to improve accuracy or adjust the agent’s behaviour.

- Memory: We’ve added memory that allows your agent to retain context across multiple prompts, so it remembers your previous request when you send the next one.